Artificial intelligence (AI) is undeniably reshaping the fabric of our world by revolutionizing industries and redefining the way we approach problem-solving. As AI technologies continue to advance, their potential applications in scientific research, particularly in materials science, are becoming increasingly apparent [1].

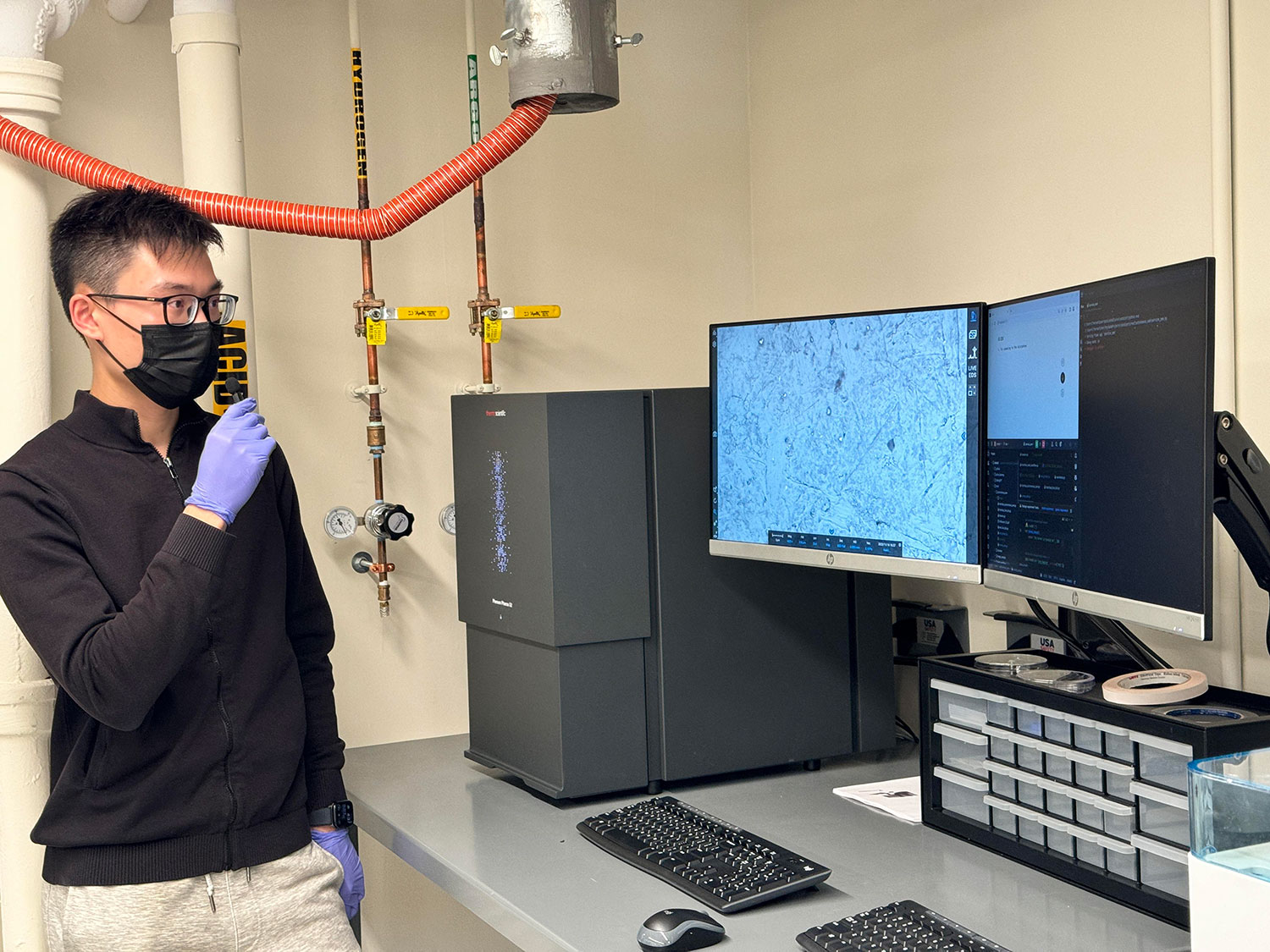

Meet Zhichu Ren, a doctoral candidate at the Massachusetts Institute of Technology (MIT) pursuing a Ph.D. in Materials Science and Engineering in the lab of Prof. Ju Li. Part of Zhichu’s work aims to fuel a paradigm shift in the way experimental research is conducted, namely by making the concept of the autonomous laboratory [2] a reality. With Zhichu working under the guidance of his graduate research advisor, that vision is now closer to being realized. CRESt (Copilot for Real-world Experimental Scientists) is a platform that Zhichu and his colleagues are developing to let researchers have laboratory equipment operated autonomously on their behalf.

Among CRESt’s many capabilities is operating the Phenom Pharos Desktop scanning electron microscope (SEM) by voice command alone.

What is CRESt and why was it created?

In an autonomous laboratory, the integration of AI with robotics is leveraged to create a self-sufficient and self-optimizing environment. Experimental processes such as data collection and analysis are conducted with minimal human intervention, allowing for continuous and efficient operation. However, such systems need to be operated using scripting languages like Python, which restricts their usage among experimental researchers. Recognizing this barrier, Zhichu and his colleagues set out to develop a voice-activated AI interface so that anyone, regardless of coding experience, could be empowered by the autonomous laboratory.

Zhichu credits the recent release of the ChatGPT API function calling feature for opening up the possibility of a voice-activated platform. ChatGPT is based on a large language model (LLM) developed by OpenAI, which is designed to understand and generate human-like text based on the input it receives. This includes assembling and executing Python code. The user simply tells CRESt what they want to accomplish and on the back end, ChatGPT activates suitable subroutines from a function pool that controls various instruments like an electron microscope.
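The function-pool idea described above can be illustrated with a minimal sketch. This is not CRESt's actual code: the routine names (`move_stage`, `acquire_image`), their parameters, and the returned strings are hypothetical stand-ins, and the language model's reply is simulated by a hand-written dictionary holding a function name plus JSON-encoded arguments, the general shape that function-calling APIs return.

```python
import json

# Hypothetical instrument routines. A real system would call the
# instrument vendor's Python library here instead of returning strings.
def move_stage(x_um: float, y_um: float) -> str:
    return f"stage moved to ({x_um}, {y_um}) um"

def acquire_image(field_width_um: float) -> str:
    return f"image acquired at field width {field_width_um} um"

# The "function pool": names the model may request, mapped to callables.
FUNCTION_POOL = {
    "move_stage": move_stage,
    "acquire_image": acquire_image,
}

def dispatch(call: dict) -> str:
    """Run the routine named in a model-issued function call."""
    name = call["name"]
    if name not in FUNCTION_POOL:
        raise KeyError(f"unknown function: {name}")
    kwargs = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return FUNCTION_POOL[name](**kwargs)

# Simulated model output for a request such as
# "take an image at a field width of 80 micrometers".
example_call = {
    "name": "acquire_image",
    "arguments": json.dumps({"field_width_um": 80}),
}
result = dispatch(example_call)
print(result)
```

Keeping the pool as an explicit dictionary means the model can only trigger routines that have been deliberately exposed, which is a sensible precaution when the callables drive physical equipment.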

Autonomous SEM in action

“In the near future, AI will become the ‘professional videographer’ for scientific researchers,” shares Zhichu. A prime example of this is the autonomous SEM module he developed for CRESt. Although SEM is one of the most widely used characterization techniques in materials science research, it has a steep learning curve. Mastering the technique can take hours, if not weeks, of training and regular hands-on use before researchers can apply it to their own projects. He states that “in the upcoming AI era, these learning costs might become unnecessary.”

In the demonstration video published to his research group’s YouTube channel, the CRESt Autonomous SEM workflow is shown being applied to visualize phase boundaries in steel using a Phenom Pharos Desktop SEM. The researcher simply requested, in natural language, an image of “the interface between austenite and martensite phases at a field width of 80 micrometers” and did not intervene further. After a series of exploration steps, the AI successfully steered the Pharos SEM to the right location, zoomed in, and delivered a satisfactory SEM image.

While Zhichu notes that the tool still has significant room for improvement, he shares how impressed he was with the preliminary results when tested on the steel sample:

“I was surprised when I realized that the AI could understand my stated objective and locate the martensite.”

In other words, given nothing more than the mention of the term, CRESt was able to identify most martensite phase regions without any prior training of the algorithm (a step that is normally crucial in developing accurate machine vision models). The closed-loop behavior of the platform is achieved by passing each acquired SEM image to a vision-capable agent, which analyzes the image through Set-of-Mark (SoM) protocols and sends next-step operational suggestions back to the SEM, such as moving the field of view, zooming in, or re-focusing. The iteration continues until the vision agent concludes that the current SEM image meets the user’s requirements.
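The closed loop described above can be sketched in a few lines. Everything here is an illustrative assumption rather than the CRESt implementation: `SemState` is a toy stand-in for the microscope, `stub_vision_agent` replaces the vision-capable agent with simple rules, and the starting field width, halving zoom step, and position tolerance are arbitrary.

```python
from dataclasses import dataclass

@dataclass
class SemState:
    """Toy stand-in for microscope state; not a real instrument interface."""
    x_um: float = 0.0
    field_width_um: float = 640.0

def stub_vision_agent(state, target_x_um, target_width_um):
    """Hypothetical placeholder for the vision agent: returns the next action."""
    if abs(state.x_um - target_x_um) > 1.0:
        return ("move", target_x_um - state.x_um)
    if state.field_width_um > target_width_um:
        return ("zoom_in", None)
    return ("done", None)

def run_closed_loop(state, target_x_um, target_width_um, max_steps=20):
    """Ask the agent for an action, apply it, and repeat until it reports done."""
    history = []
    for _ in range(max_steps):
        action, value = stub_vision_agent(state, target_x_um, target_width_um)
        history.append(action)
        if action == "done":
            break
        if action == "move":
            state.x_um += value
        elif action == "zoom_in":
            # Halve the field width, but never go below the requested width.
            state.field_width_um = max(target_width_um, state.field_width_um / 2)
    return history

state = SemState()
history = run_closed_loop(state, target_x_um=150.0, target_width_um=80.0)
print(history)
print(state)
```

The `max_steps` cap is a design choice worth keeping in any real loop of this kind, so that an agent that never reports success cannot drive the instrument indefinitely.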

Working with the Phenom Pharos Desktop SEM

One of the major deciding factors in selecting the Phenom Pharos Desktop SEM for the project was its ability to be automated:

“Both its excellent user interface and ability to be operated entirely through Python scripting languages made it the ideal candidate for developing the Autonomous SEM module in CRESt.”

– Zhichu Ren

All Phenom models benefit from Python compatibility, which offers users the freedom to build customized workflows by leveraging the expansive list of subroutines within the PyPhenom library, accessible through the Python Programming Interface (PPI). Since the GPT-4 model forms the core of the architecture of the Autonomous SEM platform, it was a critical requirement that the SEM could be communicated with using Python and thus allowed for seamless integration.

Besides automation, Zhichu’s entire research group is benefiting from the excellent resolution capability and compact size of the Phenom Pharos. Being the only desktop SEM available on the market with a field-emission gun (FEG), the instrument delivers performance on par with most floor-model SEMs. The microscope was easily installed within the existing laboratory space that his research group (http://Li.mit.edu) uses, and all of its users, about 10 of them, give it a thumbs up. His colleagues, who use the microscope regularly for their various projects, appreciate having an “on-demand” SEM that produces data much faster than if they relied solely on the shared resources available to them through the university.

Looking Forward to the Autonomous Laboratory

The prospect of streamlined workflows and increased efficiency through automation promises to revolutionize the way we conduct experiments. Thanks to Zhichu Ren and his collaborators, this new frontier is already being explored through the creation of CRESt. CRESt serves as an AI-based laboratory coordinator: researchers simply tell the tool which experiments they want to run or which data they want to obtain from a particular instrument. CRESt then initiates automation systems through Python scripts to carry out practical experiments.

As noted in their recent publication [3], CRESt is actively being developed to assist researchers in three primary modalities:

Instrument coach:

CRESt will automatically find the optimal parameter settings to perform the task at hand, much like the demonstration of the Autonomous SEM agent being used to image phase boundaries in steel.

Root-cause diagnostics:

When irreproducible results are encountered, CRESt can leverage its hypothesis-generating capabilities applied to large datasets (e.g., instrument logs, images, experimental data) to propose a list of potential causes for a human expert to further investigate.

Scientific writing:

Pattern-matching skills inherent to LLMs can be applied to analyzing scientific data through CRESt to help scientists interpret their results, which overall will make the scientific writing process more efficient.

About the Li Group at MIT

The Li Group is led by Ju Li, Battelle Energy Alliance Professor in Nuclear Engineering and Professor of Materials Science and Engineering at MIT. His group (http://Li.mit.edu) performs computational and experimental research on energy materials and systems.

References

[1] D. Morgan, G. Pilania, A. Couet, B.P. Uberuaga, C. Sun and J. Li, “Machine learning in nuclear materials research,” Current Opinion in Solid State and Materials Science 26 (2022) 100975. https://doi.org/10.1016/j.cossms.2021.100975

[2] Z-C. Ren, Z-K. Ren, Z. Zhang, T. Buonassisi and J. Li, “Autonomous experiments using active learning and AI,” Nature Reviews Materials 8 (2023) 563–564. https://doi.org/10.1038/s41578-023-00588-4

[3] Z. Ren, Z. Zhang, Y. Tian and J. Li, “CRESt – Copilot for Real-world Experimental Scientist,” ChemRxiv (2023, Preprint). https://doi.org/10.26434/chemrxiv-2023-tnz1x-v4